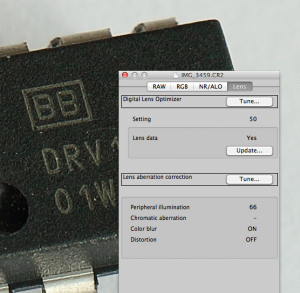

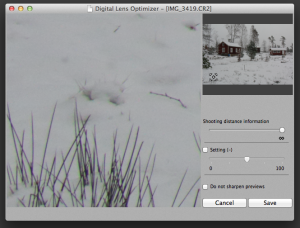

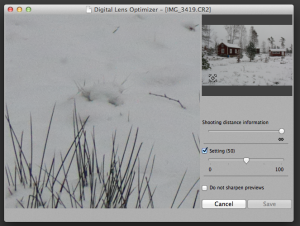

It is a matter of mathematically undoing three kinds of blur: the blur that arises when light passes through the lens, the light spreading (diffraction) that arises when the rays pass through the aperture, and the Gaussian filter that an AA filter amounts to. This is entirely possible. What we are seeing is a very sophisticated form of sharpening, and one side effect is that the visible noise, among other things, increases.
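To make the point about noise concrete, here is a rough one-dimensional NumPy sketch of the blur sources listed above. It is an illustration under stated assumptions, not how any raw converter actually works. The lens blur and the AA filter are both modeled as Gaussians with a hypothetical 0.5-pixel standard deviation. The aperture is modeled as ideal diffraction-limited optics. The camera numbers (5 µm pixels, 550 nm light, f/8) are made up for the example. The sketch multiplies the component MTFs and estimates how much white noise a full inversion of that combined blur would amplify.

```python
import numpy as np

def gaussian_mtf(f, sigma):
    # MTF of a Gaussian blur with std `sigma` pixels; f in cycles/pixel
    return np.exp(-2.0 * (np.pi * sigma * f) ** 2)

def diffraction_mtf(f, cutoff):
    # Diffraction MTF of an ideal circular aperture in incoherent light;
    # `cutoff` = 1 / (wavelength * f-number), converted to cycles/pixel
    v = np.clip(np.abs(f) / cutoff, 0.0, 1.0)
    return (2.0 / np.pi) * (np.arccos(v) - v * np.sqrt(1.0 - v ** 2))

# Hypothetical camera: 5 µm pixels, 550 nm light, f/8
pitch_mm, wavelength_mm, f_number = 0.005, 550e-6, 8.0
cutoff = pitch_mm / (wavelength_mm * f_number)   # about 1.14 cycles/pixel

f = np.linspace(0.0, 0.5, 101)                    # DC up to Nyquist (0.5 cycles/pixel)
lens = gaussian_mtf(f, sigma=0.5)                 # ASSUMED Gaussian lens blur
aa_filter = gaussian_mtf(f, sigma=0.5)            # ASSUMED Gaussian AA filter
aperture = diffraction_mtf(f, cutoff)
system = lens * aa_filter * aperture              # component MTFs multiply

# Undoing the blur means boosting each frequency by 1/system. For white noise,
# the output noise std grows by roughly sqrt(mean(boost**2)) over the band.
boost = 1.0 / system
noise_gain = float(np.sqrt(np.mean(boost ** 2)))
print(f"MTF at Nyquist: {system[-1]:.3f}, boost there: {boost[-1]:.1f}x")
print(f"approximate noise std gain: {noise_gain:.1f}x")
```

The boost needed near Nyquist is many times larger than at low frequencies, and the noise is boosted by exactly the same factor as the detail, which is why full deblurring makes the noise more visible.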

Emil Martinec has described it like this:

> For the more technically inclined, a more detailed explanation is that a good way to think about imaging components is in terms of spatial frequencies; for instance, MTFs are multiplicative: for a fixed spatial frequency, the MTF of the entire optical chain is the product of the MTFs of the individual components. So if the component doing the blurring has a blur profile B(f) as a function of spatial frequency f, and the image has spatial frequency content I(f) at the point it reaches this component, then the image after passing through that component is I'(f) = I(f)·B(f). Thus, if one knows the blur profile B(f), one can recover the unblurred image by dividing: I(f) = I'(f)/B(f). The problem is that B(f) can be small at high frequencies, since it is a low-pass filter that removes these frequencies from the image. Dividing by a small number is inherently numerically unstable, so if one chooses the wrong blur profile, or there is a bit of noise in the image, all those inaccuracies get amplified by the method. So in practice one includes a bit of damping at high frequency (quite similar to the 'radius' setting in USM) to keep the algorithm from going too far astray.
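The division and damping described in the quote can be sketched in one dimension with NumPy. This is a minimal sketch under stated assumptions, not Martinec's method or any raw converter's actual algorithm. The blur profile B(f) is assumed to be a known Gaussian defined directly in the frequency domain. The damping uses a Tikhonov/Wiener-style term `eps`, a hypothetical parameter chosen here as one common way to damp the division; it is not necessarily what any particular program does. The helper names (`gaussian_blur_profile`, `apply_profile`, `naive_deblur`, `damped_deblur`) are made up for the illustration.

```python
import numpy as np

def gaussian_blur_profile(n, sigma):
    """Blur profile B(f) of a Gaussian blur with std `sigma` pixels,
    sampled at the rfft frequencies (cycles/pixel) of an n-sample signal."""
    f = np.fft.rfftfreq(n)
    return np.exp(-2.0 * (np.pi * sigma * f) ** 2)

def apply_profile(signal, H):
    """Multiply the signal's spectrum by H(f) and transform back."""
    return np.fft.irfft(np.fft.rfft(signal) * H, n=len(signal))

def naive_deblur(blurred, B):
    # I(f) = I'(f) / B(f): fine on noise-free data, explodes where B(f) is tiny
    return apply_profile(blurred, 1.0 / B)

def damped_deblur(blurred, B, eps):
    # Damped division I'(f) * B(f) / (B(f)**2 + eps): acts like 1/B(f) where
    # B(f)**2 >> eps, and rolls off toward zero where B(f) is tiny
    return apply_profile(blurred, B / (B ** 2 + eps))

def rms(e):
    return float(np.sqrt(np.mean(e ** 2)))

rng = np.random.default_rng(0)
n = 256
truth = np.zeros(n)
truth[100:140] = 1.0                      # a sharp-edged bar as the "scene"
B = gaussian_blur_profile(n, sigma=2.0)   # assumed, known blur profile
observed = apply_profile(truth, B) + rng.normal(0.0, 0.01, n)  # blur + a little noise

errors = {
    "blurred": rms(observed - truth),
    "naive": rms(naive_deblur(observed, B) - truth),
    "damped": rms(damped_deblur(observed, B, eps=1e-3) - truth),
}
for name, err in errors.items():
    print(f"{name:8s} RMS error: {err:.3g}")
```

On this toy signal the naive division's error comes out many orders of magnitude larger than the damped version's, because the 1% noise is multiplied by 1/B(f) at frequencies where B(f) is close to zero. Raising `eps` trades residual blur for less amplified noise, which is the trade-off the damping in the quote is there to manage.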